Key Concepts

The following terms explain the technologies and techniques used in this project:

- Distributed Cache: A system that stores frequently accessed data across multiple servers or locations to reduce backend load and improve performance during high traffic.

- Cloudflare Workers: A serverless platform that runs lightweight code at the edge (close to users), enabling fast, scalable handling of web requests without relying heavily on backend servers.

- Mutex Lock: A mechanism that ensures only one process performs a specific task at a time, preventing conflicts or overload in distributed systems.
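To make the mutex idea concrete, here is a minimal sketch of a lease-based lock. It is an illustration only, not the project's implementation: `LeaseLock`, `tryAcquire`, and the key name are hypothetical, the clock is passed in as a number so the behavior is easy to follow, and the in-memory `Map` stands in for whatever shared store a distributed deployment would need.

```typescript
// Hypothetical lease-based lock (illustrative names, not from the project).
// The in-memory Map is an assumption standing in for a shared store; a real
// distributed lock would also need an atomic check-and-set on that store.
class LeaseLock {
  private leases = new Map<string, number>(); // key -> lease expiry time (ms)

  // Returns true if the caller now holds the lock for `key`.
  tryAcquire(key: string, nowMs: number, leaseMs: number): boolean {
    const expiresAt = this.leases.get(key);
    if (expiresAt !== undefined && nowMs < expiresAt) {
      return false; // another caller holds an unexpired lease
    }
    this.leases.set(key, nowMs + leaseMs);
    return true;
  }

  // Called by the holder once its task has finished.
  release(key: string): void {
    this.leases.delete(key);
  }
}

const refreshLock = new LeaseLock();
const firstCaller = refreshLock.tryAcquire("refresh:/api/items", 0, 5000);
const secondCaller = refreshLock.tryAcquire("refresh:/api/items", 100, 5000);
const afterLeaseExpiry = refreshLock.tryAcquire("refresh:/api/items", 6000, 5000);
```

The first caller acquires the lock, the second is refused while the lease is live, and a later caller can take over once the lease has expired. The expiry is a design choice that keeps a crashed lock holder from blocking everyone else indefinitely.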

Business Problem

The client’s backend infrastructure struggled to stay up during high-traffic periods. Three issues put reliability and scalability at risk:

- VPS Overload: A Windows VPS hosting part of the API had limited capacity and could handle only a small number of concurrent requests before crashing.

- Revalidation Issues: Cloudflare’s caching system sent multiple simultaneous revalidation requests to the backend when the cache expired, overwhelming the VPS during traffic spikes.

- Limited Scalability: Upgrading the VPS was expensive and only a temporary fix; it did not address the root problem of handling high traffic efficiently.

These issues threatened the client’s ability to provide uninterrupted service and maintain a reliable user experience during peak traffic periods.

Our Solution

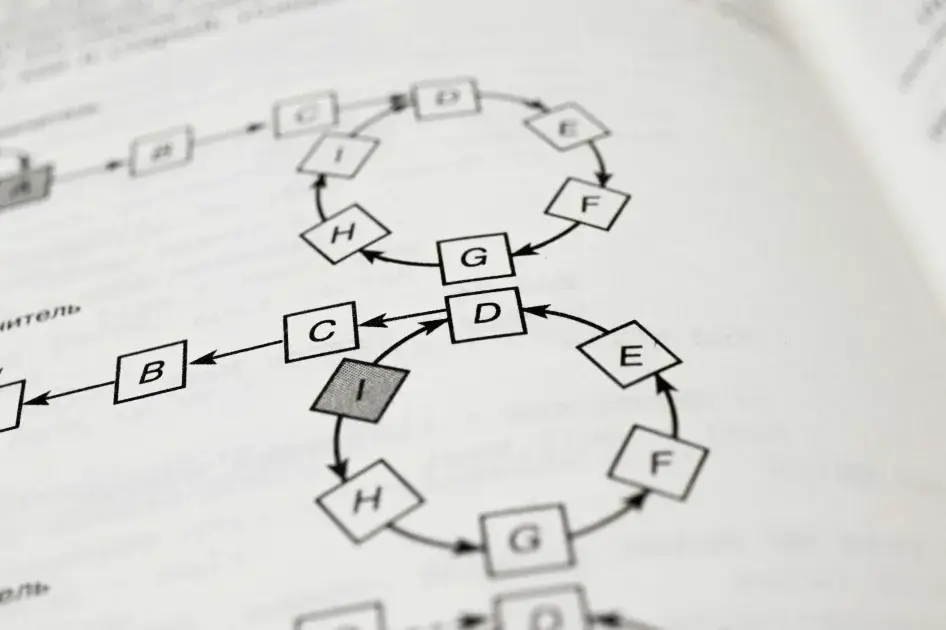

To resolve these challenges, we developed a custom distributed cache algorithm using Cloudflare Workers. This innovative solution tackled the root problem of VPS overload while ensuring scalability and reliability. Here’s what we delivered:

- Coordinated Revalidation: We implemented a mutex lock system to ensure that only one request triggered cache revalidation at a time. This eliminated the surge of simultaneous revalidation requests that had been crashing the VPS.

- Serving Stale Data: During revalidation or high-traffic periods, the system delivered stale cache entries to users when fresh data wasn’t immediately available. This ensured uninterrupted service without overloading the backend.

- Optimized Scalability: By routing traffic through Cloudflare and reducing backend dependency, the system could efficiently handle massive request volumes with minimal resource strain.

This solution provided a scalable, cost-effective architecture that prioritized uptime and reliability for the client’s operations.
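The combination of coordinated revalidation and stale serving described above can be sketched roughly as follows. This is a simplified model under stated assumptions, not the delivered Worker code: `StaleServingCache`, `lookup`, `store`, and `abandon` are hypothetical names, the cache and the in-flight marker live in process memory rather than in storage shared across edge locations, and the clock is passed in explicitly.

```typescript
// Hypothetical model of "serve stale, revalidate once" (names are illustrative).
interface Lookup {
  state: "fresh" | "stale" | "miss";
  value?: string;            // cached body, if any (may be stale)
  shouldRevalidate: boolean; // true for exactly one caller per refresh
}

class StaleServingCache {
  private entries = new Map<string, { value: string; freshUntil: number }>();
  private inFlight = new Set<string>(); // keys currently being revalidated
  private ttlMs: number;

  constructor(ttlMs: number) {
    this.ttlMs = ttlMs;
  }

  lookup(key: string, nowMs: number): Lookup {
    const entry = this.entries.get(key);
    if (entry && nowMs < entry.freshUntil) {
      return { state: "fresh", value: entry.value, shouldRevalidate: false };
    }
    // Expired or missing: only the first caller is asked to hit the backend.
    const shouldRevalidate = !this.inFlight.has(key);
    if (shouldRevalidate) this.inFlight.add(key);
    return entry
      ? { state: "stale", value: entry.value, shouldRevalidate }
      : { state: "miss", shouldRevalidate }; // no value yet; callers must wait
  }

  // Called by the revalidating caller with the backend's fresh response.
  store(key: string, value: string, nowMs: number): void {
    this.entries.set(key, { value, freshUntil: nowMs + this.ttlMs });
    this.inFlight.delete(key);
  }

  // Called if revalidation fails, so a later request can retry.
  abandon(key: string): void {
    this.inFlight.delete(key);
  }
}

// Simulate 100 requests arriving after the entry has expired.
const demoCache = new StaleServingCache(1000);
demoCache.store("/api/items", "v1", 0);
let originCalls = 0;
const served: string[] = [];
for (let i = 0; i < 100; i++) {
  const result = demoCache.lookup("/api/items", 1500);
  if (result.shouldRevalidate) originCalls++; // would trigger a backend fetch
  served.push(result.value ?? "");
}
demoCache.store("/api/items", "v2", 1600); // the single revalidation completes
const afterRefresh = demoCache.lookup("/api/items", 1700);
```

In this simulation all 100 requests receive the stale `v1` body, only one of them is told to contact the backend, and requests after the refresh see `v2`. A production version would also need a timeout on the in-flight marker so that a failed or slow revalidation cannot block refreshes permanently.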

Results

The implementation of the custom distributed cache algorithm delivered measurable improvements:

- 100% Uptime: The system maintained uninterrupted service, even during sudden traffic spikes, by eliminating backend crashes.

- Scalability Under Load: The system handled high traffic efficiently, routing requests through Cloudflare and limiting backend revalidation to a single request at a time.

- Cost Savings: The client avoided the ongoing expense of upgrading the VPS, significantly reducing operational costs.

- Reliability First: By serving stale data during high-load periods, the solution prioritized availability and user experience over strict consistency.

This custom solution empowered the client with a highly scalable, cost-effective system tailored to their unique needs, ensuring both reliability and growth potential.